Analyzing the Impact of COVID-19 on Frontline Workers Using Machine Learning

The recent pandemic upended many lives and changed the way society interacts. The whole world was encouraged to stay in lockdown, except for frontline and essential workers. As the name suggests, essential workers are those whose jobs are deemed “essential”: without them, the core functions of the economy and society would break down. Among these essential workers are the frontline workers, who have to provide their services in person. Frontline workers include, but are not limited to, doctors, nurses, cashiers, fast food workers, agricultural workers, and truck drivers.

OVERVIEW

To understand this, we undertook a project to study the impact of COVID-19 on frontline workers by analyzing the posts they made on social media. The aim was to capture the less tangible impact that the crisis was having on these workers: psychological, physical, and material. For this purpose, a novel dataset of posts from various frontline workers was extracted from the social media platform Reddit.

Throughout the pandemic, frontline workers have been among the most affected, owing to the nature of their jobs, poor management, a lack of benefits or monetary compensation, anxiety about the pandemic itself and about possible job loss, and frustration towards society.

After gauging the general emotions of these workers through their posts, a classifier was developed to detect users at elevated risk of severe mental distress (such as depression or anxiety leading to suicidal ideation), using models trained beforehand on labelled social media posts.

Several models were tested for this purpose, using different features (statistical, syntactic, word and sentence embeddings), and their accuracies were compared. The results were then visualized in Tableau to draw further insights.

DATA COLLECTION

Social media platforms make it easier to find like-minded peers, which makes interaction more likely. Reddit is one such platform: it allows users to post content anonymously, with only a username as their identity.

For this research, posts and comments were extracted using the Pushshift.io API, targeting communities (“subreddits”) meant for frontline workers.

From these subreddits, only posts related to the coronavirus were extracted, using a keyword search for terms such as coronavirus, covid19, corona, wuhan, china flu, covid, mask, social distancing, and pandemic. Posts dated between December 1st 2019 and August 24th 2020 were collected, creating a novel dataset of more than 10,000 entries from frontline workers.
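
A minimal sketch of the keyword and date filter described above, assuming each post is a dict with the `title`, `selftext`, and `created_utc` fields that Reddit submissions carry in Pushshift dumps. The article does not say how matching was done; whole-word, case-insensitive matching is an assumption here, and the retrieval from Pushshift itself is not shown.

```python
import re
from datetime import datetime, timezone

# Keywords as listed in the article; matched case-insensitively as whole words/phrases (an assumption).
KEYWORDS = [
    "coronavirus", "covid19", "corona", "wuhan", "china flu",
    "covid", "mask", "social distancing", "pandemic",
]
KEYWORD_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(k) for k in KEYWORDS) + r")\b", re.IGNORECASE
)

# Study window from the article: December 1st 2019 to August 24th 2020 (inclusive, UTC assumed).
START = datetime(2019, 12, 1, tzinfo=timezone.utc)
END = datetime(2020, 8, 24, 23, 59, 59, tzinfo=timezone.utc)


def is_covid_related(post: dict) -> bool:
    """True if the post falls inside the study window and its title or body mentions a keyword."""
    created = datetime.fromtimestamp(post["created_utc"], tz=timezone.utc)
    if not START <= created <= END:
        return False
    text = f"{post.get('title') or ''} {post.get('selftext') or ''}"
    return KEYWORD_RE.search(text) is not None


# Toy records shaped like Pushshift submissions (hypothetical content).
posts = [
    {"title": "Mask shortage again", "selftext": "", "created_utc": 1585699200},  # 2020-04-01
    {"title": "Night shift tips?", "selftext": "Any advice?", "created_utc": 1585699200},
    {"title": "COVID ward update", "selftext": "", "created_utc": 1546300800},    # 2019-01-01
]
covid_posts = [p for p in posts if is_covid_related(p)]
```

Only the first toy post survives: the second mentions no keyword, and the third predates the study window.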

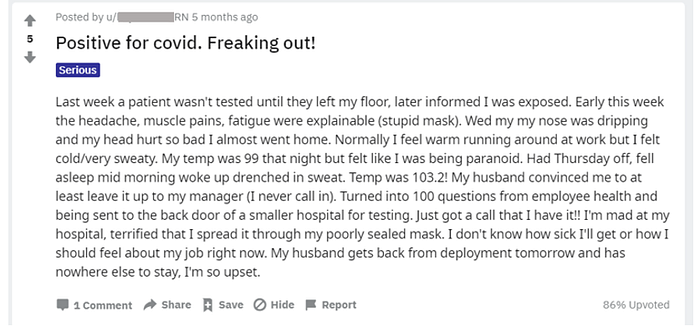

Post from r/Nurse

SENTIMENT ANALYSIS

For this analysis, both the title and the text body of each post were used, and the overall sentiment was extracted using VADER and TextBlob.

For each text post, VADER reports the proportions of the text that are positive, negative, and neutral. A fourth value, ‘compound’, is a normalized score between -1 (most negative) and +1 (most positive) that summarizes the overall sentiment of the text.

With TextBlob, the polarity and subjectivity of each text post were computed, with polarity ranging from -1 to +1 and subjectivity from 0 to 1. Polarity reflects emotional tone: a high polarity score implies that the text is positive, while a low score implies negativity. Subjectivity measures how much the text expresses opinion rather than fact.
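
The sentiment features above could be computed per post roughly as follows. This sketch assumes the `vaderSentiment` and `textblob` packages; `vader_label` applies the ±0.05 compound cut-offs suggested in VADER's documentation, which the article does not say it used, and the function names are hypothetical.

```python
from functools import lru_cache


def vader_label(compound: float, threshold: float = 0.05) -> str:
    """Map VADER's compound score (-1..+1) to a label using symmetric cut-offs."""
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"


@lru_cache(maxsize=1)
def _vader():
    # Imported lazily (and built once) so vader_label() works without the package installed.
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer
    return SentimentIntensityAnalyzer()


def sentiment_features(title: str, body: str) -> dict:
    """VADER and TextBlob scores for a post's title and body combined."""
    from textblob import TextBlob

    text = f"{title or ''}\n{body or ''}"
    vader = _vader().polarity_scores(text)  # dict with keys neg, neu, pos, compound
    blob = TextBlob(text).sentiment         # namedtuple with polarity, subjectivity
    return {
        "vader_neg": vader["neg"],
        "vader_neu": vader["neu"],
        "vader_pos": vader["pos"],
        "vader_compound": vader["compound"],
        "vader_label": vader_label(vader["compound"]),
        "tb_polarity": blob.polarity,          # -1 (negative) .. +1 (positive)
        "tb_subjectivity": blob.subjectivity,  # 0 (objective) .. 1 (subjective)
    }
```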

MENTAL DISTRESS DETECTION

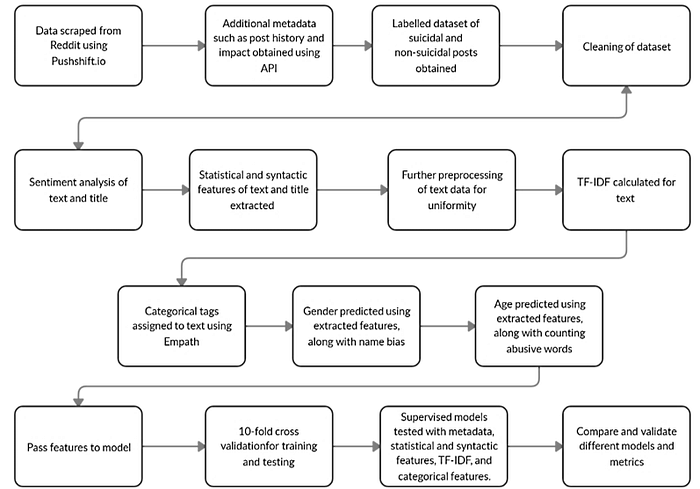

A balanced dataset from Reddit comprising of more than 100,000 posts, prelabelled as suffering from extreme mental distress and not possessing such tendencies was used for building the model.

This dataset was created by extracting posts from r/SuicideWatch, a community where users generally post about their suicidal thoughts. Common themes involve discussing why they want to commit suicide and talking about previous attempts.

Example of a mentally stressed and non-mentally stressed post respectively, from Reddit

Non-suicidal posts, i.e. posts with no suicidal tendencies, were extracted from other popular subreddits (r/books, r/jokes, r/legaladvice, r/casualconversation, and r/college) to create a roughly balanced dataset.

Using this dataset, models were built to capture how users suffering from severe mental health issues may differ from other users in their posting patterns and habits.
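
A sketch of how such a labelled dataset might be assembled, assuming each scraped record is a dict with hypothetical `subreddit` and `text` keys. Downsampling the larger class to exactly match the smaller one is an assumption; the article only says the result was roughly balanced.

```python
import random


def build_balanced_dataset(posts, distress_subreddit="SuicideWatch", seed=0):
    """Return shuffled (text, label) pairs: 1 for the distress subreddit, 0 otherwise, with equal class sizes."""
    positives = [(p["text"], 1) for p in posts if p["subreddit"] == distress_subreddit]
    negatives = [(p["text"], 0) for p in posts if p["subreddit"] != distress_subreddit]
    rng = random.Random(seed)
    n = min(len(positives), len(negatives))
    # Downsample whichever class is larger so both contribute n examples.
    sample = rng.sample(positives, n) + rng.sample(negatives, n)
    rng.shuffle(sample)
    return sample


raw = (
    [{"subreddit": "SuicideWatch", "text": f"sw post {i}"} for i in range(3)]
    + [{"subreddit": s, "text": f"{s} post"}
       for s in ["books", "jokes", "legaladvice", "CasualConversation", "college"]]
)
dataset = build_balanced_dataset(raw)
```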

1. LGBM (Light Gradient Boosting Machine)

To build the supervised learning model, statistical, metadata, syntactic, and categorical features were extracted in addition to the features obtained from sentiment analysis.

Statistical: By splitting the text and checking for particular words and characters, the counts of words, characters, sentences, stop words, special characters, alphabetic characters, and digits, as well as the average sentence length, were obtained for both the title and the body text.

Additionally, the total number of derogatory words was counted.
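
A dependency-free sketch of the statistical features. The stop-word and derogatory-word lists are hypothetical placeholders, since the article does not name the lists it used, and sentence splitting on `.`, `!`, and `?` is a simplification.

```python
import re
import string

# Hypothetical word lists; the article does not specify which ones were used.
STOP_WORDS = {"a", "an", "the", "and", "or", "i", "to", "of", "is", "it", "in", "my"}
DEROGATORY_WORDS = {"idiot", "stupid"}


def statistical_features(text: str, prefix: str) -> dict:
    """Simple counts for one field (e.g. prefix='title' or 'body')."""
    text = text or ""
    words = text.split()
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lowered = [w.strip(string.punctuation).lower() for w in words]
    return {
        f"{prefix}_n_words": len(words),
        f"{prefix}_n_chars": len(text),
        f"{prefix}_n_sentences": len(sentences),
        f"{prefix}_avg_sentence_len": len(words) / len(sentences) if sentences else 0.0,
        f"{prefix}_n_stopwords": sum(w in STOP_WORDS for w in lowered),
        f"{prefix}_n_special": sum(c in string.punctuation for c in text),
        f"{prefix}_n_alpha": sum(c.isalpha() for c in text),
        f"{prefix}_n_digits": sum(c.isdigit() for c in text),
        f"{prefix}_n_derogatory": sum(w in DEROGATORY_WORDS for w in lowered),
    }
```

Calling it once with `prefix="title"` and once with `prefix="body"` yields the two sets of counts described above.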

Metadata: Data extracted along with each post was also used, such as its score, number of comments, and the date and time of publication.
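
The date and time of publication can be turned into numeric features from the `created_utc` Unix timestamp that Reddit submissions carry, alongside the `score` and `num_comments` fields. Which time features the article derived is not stated; hour, weekday, and month are assumptions.

```python
from datetime import datetime, timezone


def metadata_features(post: dict) -> dict:
    """Numeric features from a Reddit submission's score, comment count, and UTC creation time."""
    created = datetime.fromtimestamp(post["created_utc"], tz=timezone.utc)
    return {
        "score": post.get("score", 0),
        "num_comments": post.get("num_comments", 0),
        "hour_utc": created.hour,
        "weekday": created.weekday(),  # Monday = 0 ... Sunday = 6
        "month": created.month,
    }
```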

Syntactic: Part-of-speech (POS) tagging was done on the text body to capture grammatical variations. The text was converted to lowercase tokens, each token was tagged, and the number of occurrences of each tag was counted.

This was done with TextBlob: each text body was converted into a “blob”, which can be read word by word, with each word assigned its corresponding POS tag.
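
A sketch of the tag counting, assuming TextBlob's `tags` property, which returns (word, tag) pairs using Penn Treebank tags. `count_pos_tags` is kept separate so it can be used without TextBlob's NLTK corpora installed.

```python
from collections import Counter


def count_pos_tags(tagged):
    """Count POS tags in a sequence of (word, tag) pairs, e.g. TextBlob's .tags output."""
    return Counter(tag for _, tag in tagged)


def pos_tag_counts(text: str) -> Counter:
    # Lazy import; TextBlob's tagger needs NLTK data (python -m textblob.download_corpora).
    from textblob import TextBlob

    # Lowercase first, as the article describes, then tag and count.
    return count_pos_tags(TextBlob((text or "").lower()).tags)
```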

Categorical: Each text is scored against categories such as ‘family’, ‘health’, ‘death’, ‘disgust’, and ‘joy’, and the differences between mentally stressed and non-mentally stressed posts are noted. For instance, posts containing suicidal ideation tend to express more negative emotions: they are three times more likely to contain words relating to fear, death, violence, and shame.

These categories are obtained using Empath, which generates lexical categories from seed words by using neural embeddings, learned with a skip-gram network, to identify related words. 196 categories were obtained for this dataset.
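
A hedged sketch of the Empath step and of one way to compare a category between the two classes. It assumes the `empath` package's `Empath().analyze(text, categories=..., normalize=True)` call, which returns per-category scores normalised by token count; `category_ratio` is a hypothetical helper, and a ratio of mean normalised scores is only one way to express a claim like "three times more likely".

```python
import math
from statistics import mean


def empath_scores(texts, categories=None):
    """Per-post Empath category scores, normalised by token count (requires the `empath` package)."""
    from empath import Empath  # lazy import so category_ratio() works without it

    lexicon = Empath()
    # Blank texts are skipped and a missing result is treated as "no matches".
    return [
        (lexicon.analyze(t, categories=categories, normalize=True) or {}) if t and t.strip() else {}
        for t in texts
    ]


def category_ratio(rows, labels, category):
    """Mean score of `category` in label-1 posts divided by its mean in label-0 posts (NaN if undefined)."""
    pos = [r.get(category, 0.0) for r, y in zip(rows, labels) if y == 1]
    neg = [r.get(category, 0.0) for r, y in zip(rows, labels) if y == 0]
    if not pos or not neg or mean(neg) == 0:
        return math.nan
    return mean(pos) / mean(neg)
```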

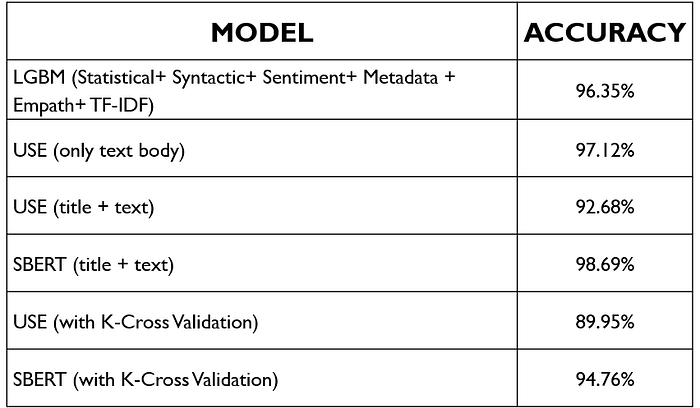

These features, along with TF-IDF scores of the text posts, gave the best results with LGBM, reaching an accuracy of 96.35%.

The model was then used to detect the mental health tendencies of frontline workers from their text posts. The task was framed as binary classification, with 1 indicating poor mental health and higher suicidal tendencies, and 0 indicating stable mental health.
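
To illustrate TF-IDF weighting combined with the handcrafted features, here is a small dependency-free TF-IDF that mirrors the smoothed IDF and L2 normalisation used by default in scikit-learn's `TfidfVectorizer` (tokenisation here is a plain whitespace split, which differs from scikit-learn's). The `train_lgbm` helper is hypothetical: it uses `lightgbm.LGBMClassifier` with default hyperparameters because the article does not report its settings, and it builds a dense matrix, which is only practical for small samples; at the article's scale of 100,000 posts a sparse matrix would be needed.

```python
import math
from collections import Counter


def tfidf(docs):
    """Smoothed TF-IDF with L2 normalisation: idf(t) = ln((1 + n) / (1 + df(t))) + 1."""
    tokenized = [(doc or "").lower().split() for doc in docs]
    n = len(tokenized)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    vectors = []
    for toks in tokenized:
        weights = {t: c * idf[t] for t, c in Counter(toks).items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({t: w / norm for t, w in weights.items()})
    return vectors


def train_lgbm(feature_rows, tfidf_rows, labels):
    """Fit LightGBM on numeric feature dicts concatenated with TF-IDF weights (hypothetical helper)."""
    import numpy as np
    from lightgbm import LGBMClassifier  # lazy imports: numpy and lightgbm required

    feat_names = sorted({k for row in feature_rows for k in row})
    vocab = sorted({t for row in tfidf_rows for t in row})
    X = np.array(
        [[f.get(k, 0.0) for k in feat_names] + [v.get(t, 0.0) for t in vocab]
         for f, v in zip(feature_rows, tfidf_rows)],
        dtype=float,
    )
    model = LGBMClassifier()  # default hyperparameters
    model.fit(X, np.asarray(labels))  # labels: 1 = distress, 0 = stable
    return model, feat_names, vocab
```

Rarer terms receive a larger IDF, so in `tfidf(["covid mask", "covid"])` the word "mask" outweighs "covid" in the first document.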

2. USE (Universal Sentence Encoder)

The Universal Sentence Encoder encodes text into high-dimensional vectors that can be used for text classification, and is optimized for sentences, phrases, and short paragraphs.

The body text of each submission in the prelabelled mental distress dataset was encoded into a 512-dimensional vector. These vectors were fed into a neural network, which reached an accuracy of 97.12% after 100 epochs.

To try to improve the model, both the title and the text body were used for prediction: the two were concatenated and embedded into a 512-dimensional vector with the Universal Sentence Encoder. However, this model achieved a lower accuracy of 92.68%.
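
A sketch of the USE pipeline. `combine_title_body` shows one way to concatenate the title and body while tolerating missing values. The TF Hub model URL (version 4 of the Universal Sentence Encoder) and the network architecture in `build_classifier` are assumptions; the article describes neither.

```python
def combine_title_body(title, body) -> str:
    """Join title and body, skipping missing or blank parts (None, NaN, empty strings)."""
    parts = [p.strip() for p in (title, body) if isinstance(p, str) and p.strip()]
    return " ".join(parts)


def embed_use(texts):
    """512-dimensional Universal Sentence Encoder embeddings (requires tensorflow and tensorflow_hub)."""
    import tensorflow_hub as hub

    encoder = hub.load("https://tfhub.dev/google/universal-sentence-encoder/4")  # model version assumed
    return encoder(list(texts)).numpy()


def build_classifier(input_dim=512):
    """Hypothetical feed-forward binary classifier over sentence embeddings."""
    import tensorflow as tf

    model = tf.keras.Sequential([
        tf.keras.Input(shape=(input_dim,)),
        tf.keras.layers.Dense(256, activation="relu"),
        tf.keras.layers.Dropout(0.3),
        tf.keras.layers.Dense(1, activation="sigmoid"),  # probability of label 1 (distress)
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Training would then look like `build_classifier().fit(embed_use(texts), labels, epochs=100)`, where 100 epochs matches the article and `texts` and `labels` (a NumPy array of 0/1 values) come from the prelabelled dataset.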

3. SBERT (Sentence-BERT)

The title and text body were concatenated and embedded into a 768-dimensional vector using SBERT. This model achieved an accuracy of 98.6% on the suicidal ideation dataset and was used to predict the mental stability of users in frontline worker communities from their text posts.

To obtain an estimate less affected by overfitting, k-fold cross-validation was then used, giving an accuracy of 94.76%.
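
A sketch of the SBERT embedding step and of k-fold cross-validation. The model name `all-mpnet-base-v2` is an assumption (it is one `sentence-transformers` model that outputs 768-dimensional vectors; the article does not name its model), and `kfold_indices` and `cross_val_accuracy` are hypothetical helpers that work with any classifier exposing `fit` and `predict`. In practice scikit-learn's `KFold` or `cross_val_score` would do the same job.

```python
import random


def embed_sbert(texts, model_name="all-mpnet-base-v2"):
    """768-dimensional SBERT embeddings; the model name is an assumption (requires sentence-transformers)."""
    from sentence_transformers import SentenceTransformer

    return SentenceTransformer(model_name).encode(list(texts))


def kfold_indices(n, k=5, seed=0):
    """Yield (train_idx, test_idx) for k shuffled, roughly equal folds; each index is tested exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        if not test:  # only happens when k > n
            continue
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


def cross_val_accuracy(X, y, make_model, k=5, seed=0):
    """Mean held-out accuracy over k folds; make_model() returns a fresh object with fit/predict."""
    scores = []
    for train, test in kfold_indices(len(y), k, seed):
        model = make_model()
        model.fit([X[i] for i in train], [y[i] for i in train])
        preds = model.predict([X[i] for i in test])
        scores.append(sum(p == y[i] for p, i in zip(preds, test)) / len(test))
    return sum(scores) / len(scores)
```

Because every fold is scored on posts the model never saw during fitting, the averaged accuracy is usually lower than a score measured on training data, which is consistent with the drop from 98.6% to 94.76% reported above.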

VERIFICATION WITH MISCLASSIFIED POSTS

Following the prediction with LGBM, 45 misclassified posts were manually identified. Of these 45, 28 were wrongly predicted to exhibit signs of mental illness (label 0 predicted as label 1). These misclassified posts were compared with the other models' predictions for the same posts, to judge which model works best.

USE correctly classified 36 of the 45 posts, but failed on null entries (posts with no body text). Embedding the title and text together solved this, and that model classified 35 posts correctly.

Despite having the best accuracy of all the models, the SBERT model using the title and text fell short of both USE models on these posts, correctly classifying only 31 of the 45, which suggests overfitting. Its counterpart using k-fold cross-validation correctly classified 34 posts.
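
The model comparison on the misclassified posts reduces to counting correct predictions per model on a fixed subset, and LGBM's errors can be split by direction. A minimal sketch with hypothetical helper names and toy labels:

```python
def correct_counts(y_true, predictions):
    """Number of correct predictions per model on the same subset of posts."""
    return {name: sum(p == t for p, t in zip(preds, y_true)) for name, preds in predictions.items()}


def error_breakdown(y_true, y_pred):
    """False positives (0 predicted as 1) and false negatives (1 predicted as 0)."""
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return {"false_positives": fp, "false_negatives": fn}
```

Applied to the article's figures, LGBM's 45 errors split into 28 false positives and, since the task is binary, 45 - 28 = 17 false negatives.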

INSIGHTS FROM VISUALIZATION

Using Tableau and the LGBM model's initial predictions on the frontline posts, several visualizations were produced from which insights could be drawn.

The first explores how the number of posts related to COVID-19 varied over time. The earliest such post in the chosen subreddits appeared on January 21st.

The number of related posts increased as awareness of the novel coronavirus grew. The nursing subreddit has the greatest overall number of posts with keywords related to the coronavirus.

All subreddits saw the frequency of posts rise sharply between March and April, coinciding with the WHO declaring the outbreak a pandemic. This was also the most chaotic period, with countries enforcing lockdowns and frontline workers unsure of how to proceed.

The next graph shows the number of posts predicted to exhibit signs of extreme mental stress. As in the previous visualization, the numbers peaked between March and April.

The last shows the number of posts made in each subreddit and the proportion of those posts classified as showing high levels of mental stress. The nursing subreddit had the most posts, and more than half of its COVID-19-related posts exhibit signs of mental distress.
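
The article builds these views in Tableau. As a hedged alternative, the underlying aggregations could be prepared in Python before plotting or export. This sketch assumes each post is a dict with `subreddit` and `created_utc` keys and that `predictions` holds the classifier's 0/1 outputs in the same order; the function names are hypothetical.

```python
from collections import Counter
from datetime import datetime, timezone


def monthly_counts(posts):
    """Count posts per (subreddit, 'YYYY-MM') using each post's UTC creation time."""
    counts = Counter()
    for p in posts:
        month = datetime.fromtimestamp(p["created_utc"], tz=timezone.utc).strftime("%Y-%m")
        counts[(p["subreddit"], month)] += 1
    return counts


def distress_share(posts, predictions):
    """Per subreddit: (number of posts, fraction predicted as label 1)."""
    totals, flagged = Counter(), Counter()
    for p, pred in zip(posts, predictions):
        totals[p["subreddit"]] += 1
        flagged[p["subreddit"]] += int(pred == 1)
    return {s: (n, flagged[s] / n) for s, n in totals.items()}
```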

CONCLUSION

Using these techniques, the hidden impact of the crisis on frontline and essential workers can be identified, and alerts can be raised.

CONTRIBUTORS

Snigdha Ramkumar | Intern @ Sopra Steria | Human-Centred Data Science | UX Enthusiast

Aakash Yadav | Sopra Steria | AI-ML Solutions Architect | NLP | Deep Learning Enthusiast